Ideation

Ideation Synthesis

Divergent Ideation

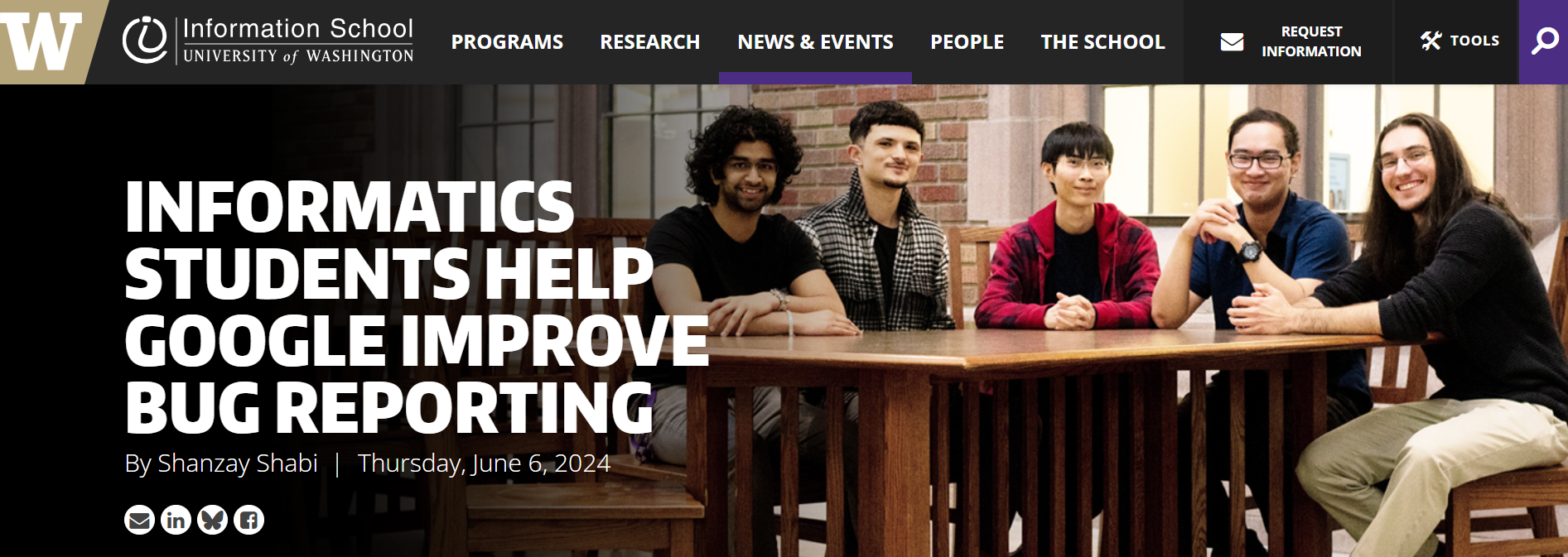

Leveraging our research insights, I facilitated an ideation workshop that began with individual brainstorming to encourage diverse thinking before converging as a team.

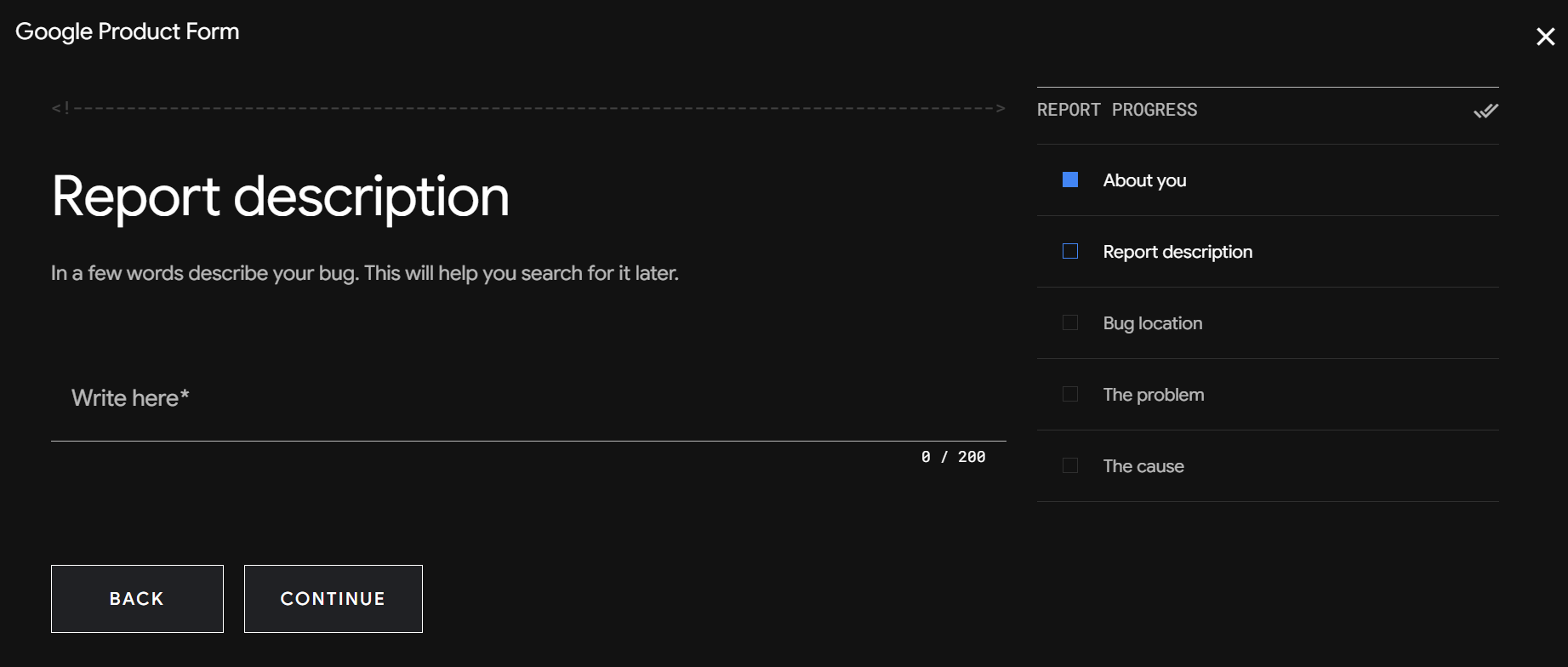

My concepts focused on enhancing the existing VRP form through clearer guidance and progress tracking, supported by AI-driven insights that help reporters understand the novelty and quality of their submissions.

Several of my ideas are outlined below:

Content Flagging

Flag unacceptable form content

AI Gap Filling

AI recommends missing content

Collaborative Reporting

Hunters build reports together

Report Assistant

Conversation-focused AI assistant

Form Walkthrough

Structured report onboarding

Dynamic Incentive

Adjust reward based on quality

Dynamic Templates

Report-specific templates

Progress Tracking

Report progress & AI use metrics

Playbook Website

Provide reporting options & outcomes

Attribute Summary

Summarize missing report attributes

Automated Deduplication

Detect duplicate content in real time

Incentivized Structuring

Content-based reward progression

Report Chatbot

Answers report content questions

Report Alerts

Alert if content violates guidelines

I led a convergent ideation workshop with my team to narrow the focus of our 34 ideas. I guided the team to identify unique solutions, combine adjacent pairs, and unify similar ideas. We then visualized the resulting parent and child ideas.

I noticed two possible solution mediums emerge: form and portal. Form features would build on the existing report form structure. A portal could offer a broader range of reporting support, including form-specific features.

These ideas and potential mediums raised many design, technical, and impact questions. We needed to prioritize in order to sharpen our project vision.

I created an impact-feasibility matrix to visually prioritize which ideas the team could pursue. I led a co-working session with our engineers to scope technical feasibility while I provided research and design insights.

This process allowed the team to dynamically prioritize ideas, identify constraints, and ensure our next steps provided high impact to Bug Reporters and Android Security stakeholders.

Automated Duplicate Check

Automatically detect content similarity and notify bug reporters as they fill out their report

Automated Flagging & Guidance

AI provides real-time guidance to structure input fields while flagging low-quality content

Explicit Form Instructions

Provide formatting guidance for each input field with specific content instructions

Form Walkthrough & Examples

Provide a guided walkthrough of each report field with validated high-quality examples

Placeholder Templates & Content

Include bug-specific report templates with placeholder content for a robust launch point

Collaborative Bug Reporting

Connect reporters to build a bug report together and share potential rewards

Report Progress Tracking

Track report progress and AI involvement metrics to validate report efficacy

Automated Report Querying

Automatically request missing content from reporters via a secondary submission

Confirm Experience Level

Gauge reporters' experience level to anticipate potential variance in report quality

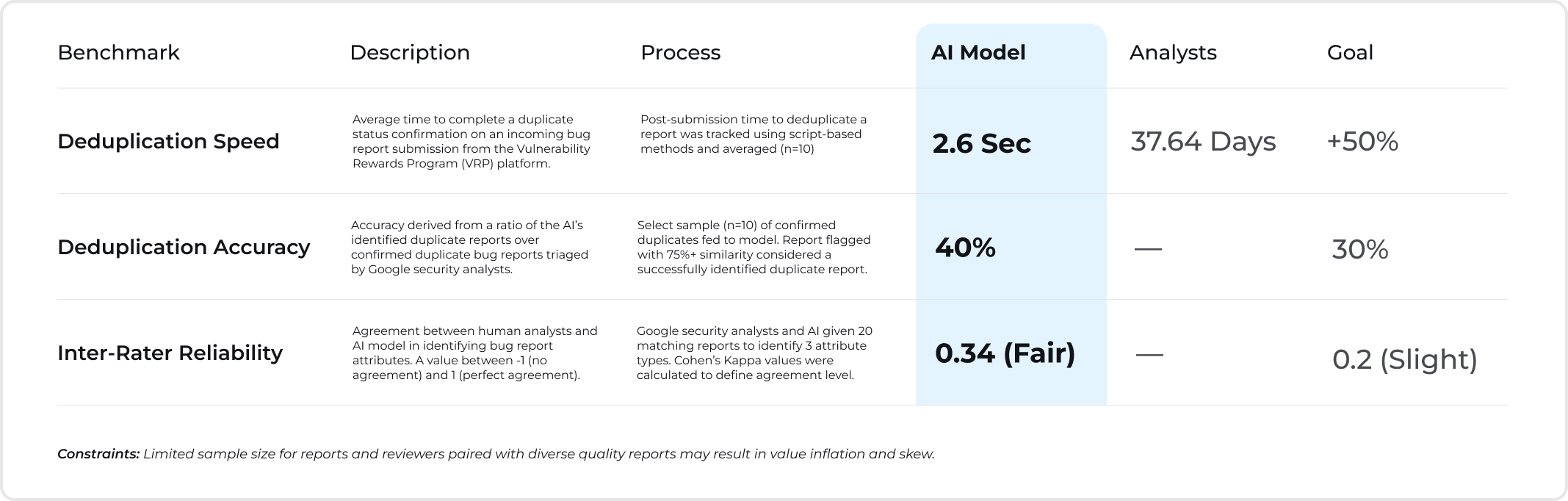

Through iterative discussions with Google, I set a strategic focus on automating duplicate bug report checks for reporters, based on time constraints and business priorities.

This direction addresses critical pain points, including the lack of visibility into duplicate status and the resulting surge in redundant bug report submissions. The remaining features were documented for future investigation.
